The Impact of LLMs on Search and SEO

With recent developments in AI and large language models (LLMs), we cannot ignore the implications they will have for how people use search engines and how we understand SEO.

Google's original PageRank model, developed in 1996 to evaluate backlinks and the worthiness of websites, was a major breakthrough. Yet the foundation of how we rank websites has not changed much since then, aside from other less important but still relevant factors that influence websites and their rankings for specific queries.

LLMs, with GPT at the forefront, have started to challenge these traditional models by offering users new ways to search for information. Using ChatGPT to look up facts or information takes significantly less time and fewer clicks, and the answers are often more representative of objective facts, since LLMs are by nature built to “consume” large amounts of information.

This makes LLMs practical not just for writing poems and code or preparing travel itineraries, but also for many useful information “lookups”.

Let’s look at some examples of how people have learned to use LLMs such as GPT to get quick, efficient answers to targeted questions, and what this might mean for the future of SEO.

LLMs in Practice

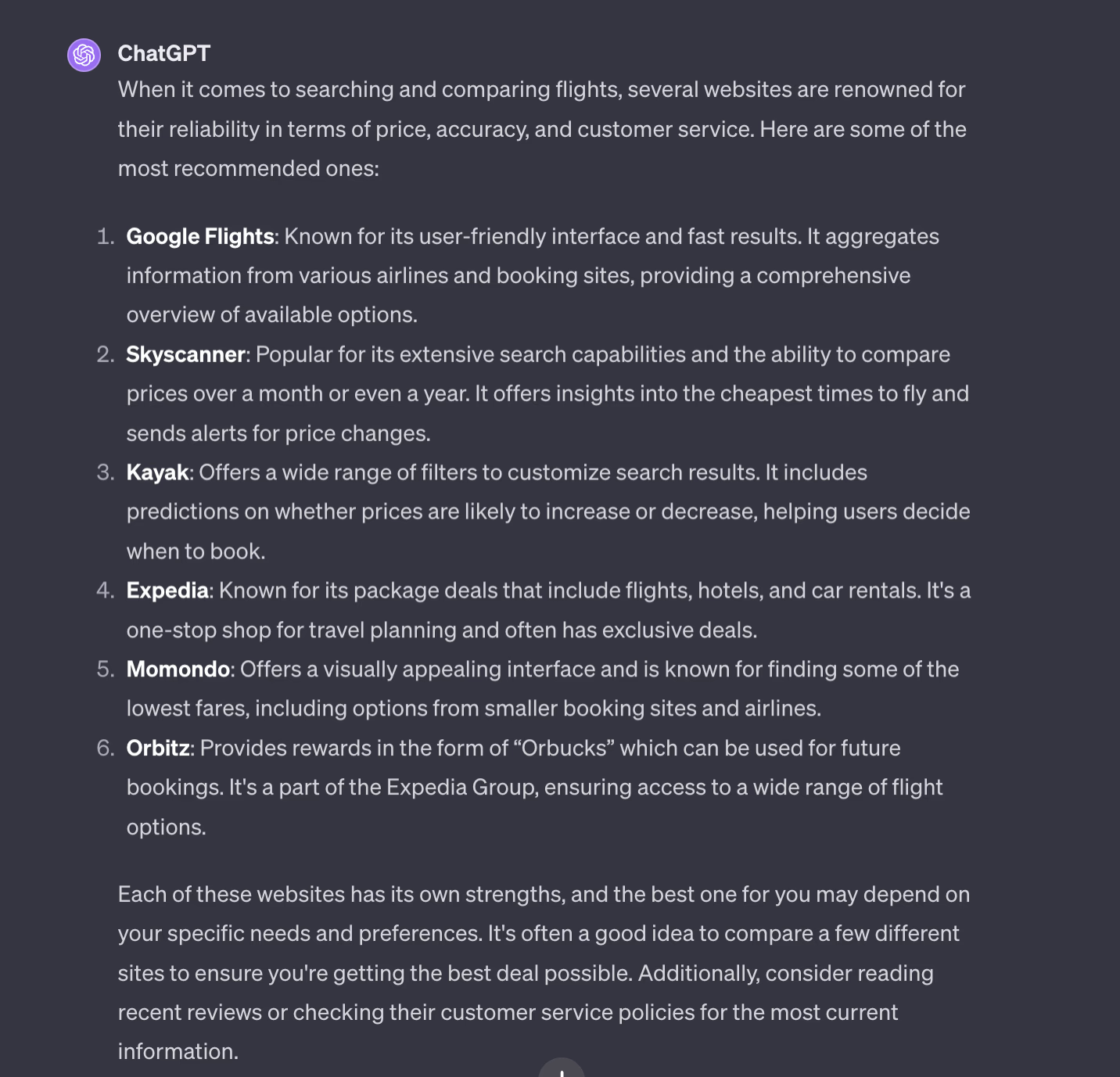

We asked ChatGPT “What are the most reliable websites for searching and comparing flights in terms of price, accuracy and customer service?”

GPT listed the main options, with a short summary of each service. Recognizing the efficiency of this approach, which not only saves time but, importantly, also bypasses biased opinions from review sites, users are increasingly turning to GPT to access information.

This opens up a new field of information optimization that, similar to SEO, includes techniques and approaches for understanding the questions our prospects and users ask when looking for solutions to the problems our products or services solve.

This emerging discipline, which we could call LLMO (Large Language Model Optimization), focuses on ways to optimize our position for these queries so that we become more relevant and visible and rank higher.

In the following sections, we will look more closely at how these GPT questions differ from the queries users type into search engines, why we should care about them, and how to prepare for optimization in order to leverage this innovation for the growth of our business or our clients’ products and services.

Why Users Are Turning to ChatGPT

This shift from using a traditional search engine to directing your questions at ChatGPT is not a passing trend but a direct response to ChatGPT’s advantages. Here are some of the key reasons users find that GPT returns answers more closely aligned with their requirements:

- Comprehensive and informative. While search engines return a list of links that the user has to sift through manually, GPT can generate text that directly answers the query. This is especially helpful for users who want a quick and thorough answer but lack the time or capacity to open and read a series of links from the first page of results.

- Objective and unbiased. Traditional search results can be influenced by factors that are not equally available to all, such as the budget a website can spend on buying links, or other dubious ranking strategies. LLMs, on the other hand, are trained on massive datasets and draw on the statistical patterns learned from that data, so their answers are shaped by the information available rather than by a company’s budget.

- Personalized. Traditional search engines handle complex personal background information poorly, even when it is essential to the answer a user is seeking; they typically take a one-size-fits-all approach and present largely identical results for a given query. GPT is groundbreaking in this regard, as it can adapt to user preferences and requirements expressed through explicit, context-rich input, relying on what the user chooses to share rather than on tracking their behavior.

- Dynamic. LLMs can engage in multi-turn conversations, encouraging users to refine their queries and add context through follow-up questions. This enables them to produce progressively refined responses that feel tailored to each user.

How LLMs are Changing the Way We Search

Working with traditional search engines, users have learned to enter precise keywords that match the information they are seeking. This approach often requires breaking a question into multiple keywords, which is inefficient and may not yield the desired results.

Even as LLMs are being integrated into search engines specifically to make results more relevant, search engines still struggle and often present irrelevant or incomplete results.

With the emergence of models such as GPT, new perspectives on the search process are opening up, and we are seeing a clear shift from fragmented, keyword-based queries to more natural and intuitive questions. This evolution parallels the rise of voice search, which Google has reported accounts for 20% of its mobile search queries.

Interactions with LLMs such as ChatGPT are empowering users to actively shape and direct the information-seeking process, and to develop a deeper understanding of the information they need and how to articulate their questions effectively to obtain the desired results.

Instead of relying on a simple string of disconnected keywords, they are learning to:

- phrase their questions in a clear, concise manner, avoiding ambiguity and vague language;

- provide context and specific details, including relevant background information, preferences, and situational factors.

What Kind of Questions are People Asking?

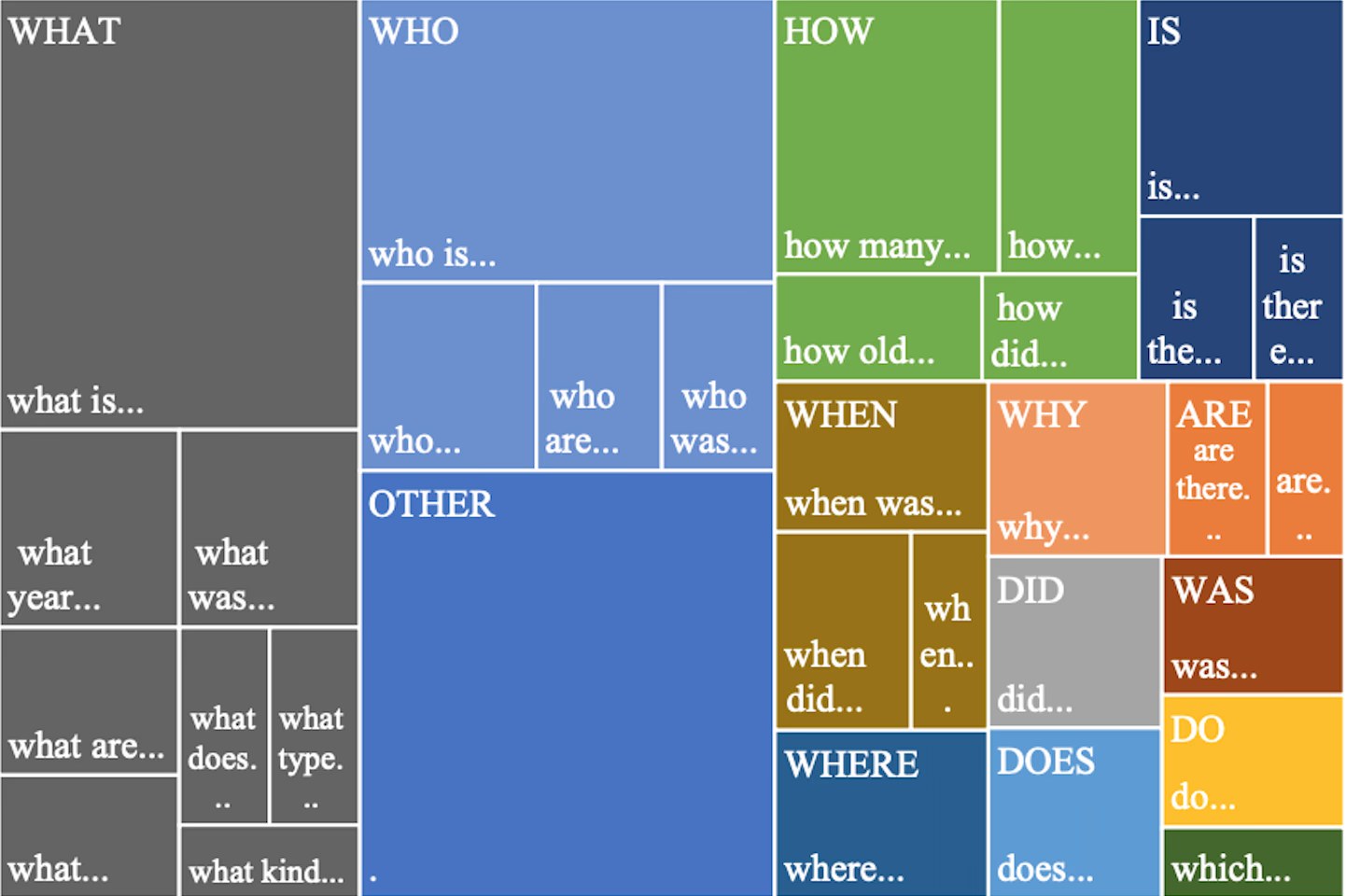

Taking one step back in the GPT query process, it is essential to understand not only why and when people turn to AI for answers, but also how they phrase their queries and what contextual information can be inferred from them.

This understanding forms the core of the emerging discipline of AEO (Answer Engine Optimization), which zeroes in on the patterns of these user queries and emphasizes the need for content that directly addresses specific user needs.

Examples of the Most Frequent Question Structures

These questions follow specific patterns and structures that are essential to understand for any attempt at GPT optimization. Here are some common phrasings of queries users direct at ChatGPT when they are looking for specific products or services:

SEEKING PERSONALIZED RECOMMENDATIONS

Users frequently turn to GPT for suggestions, personalized recommendations or expert advice, phrasing their questions like “What are the best…” or “Can you recommend some…”.

PRICE-SENSITIVE QUERIES

LLMs are a great tool to consult when you want to find the best value for your money. When connected to web search, they can provide up-to-date information on pricing, discounts and cost-effective options for various scenarios.

Questions are phrased along the lines of “What are the cheapest….”, “What is the most cost-effective…”, or “Where can I find affordable….”

FEATURE-SPECIFIC REQUESTS

Users often inquire about specific features or qualities of products and services.

For example, they might ask “Which [product/service] has the best [specific feature]?” or “Can you name a [product/service] that offers [specific feature]?”

COMPARATIVE QUESTIONS

These kinds of inquiries are especially well-suited for LLMs, as they can provide a detailed analysis of different products, based on the needs and preferences indicated by the user.

These questions are phrased as “Is X better than Y?”, “How does X compare to Y in terms of [specific feature]?” or “What’s the difference between X and Y?”

LOCATION-BASED SEARCHES

LLMs handle queries that incorporate a geographical element well, offering information about nearby options, services or activities, especially when web search is enabled.

Questions are phrased like “Where can I buy X near me?” or “What are the best [services] available in [location]?”

PROBLEM-SOLVING QUERIES

Many users come to an LLM with a specific problem, asking “How can I solve X?” or “What’s the best way to deal with Y?”

These questions indicate that users are looking for products or services as solutions.

In response to these insights, businesses are advised to adopt a proactive content strategy and create material that precisely meets the specific needs revealed by user queries. Doing so helps ensure that products and services are not only visible in search results but also resonate with the target audience’s needs in various scenarios.
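To make these patterns actionable, a team could tag the prompts it collects (for example from customer interviews or support chats) by question type. The sketch below is a minimal, keyword-based classifier in Python; the category names and regular expressions are hypothetical choices based on the phrasings listed above, not a standard taxonomy, and would need tuning on real data.

```python
import re
from collections import Counter

# Hypothetical regex patterns for the question categories described above.
# They are illustrative starting points and would need tuning on real prompts.
PATTERNS = {
    "recommendation": [r"\bwhat are the best\b", r"\bcan you recommend\b"],
    "price": [r"\bcheapest\b", r"\bmost cost-effective\b", r"\baffordable\b"],
    "feature": [r"\bwhich .+ has the best\b", r"\bthat offers\b"],
    "comparison": [r"\bis .+ better than\b", r"\bcompare\b", r"\bdifference between\b"],
    "location": [r"\bnear me\b", r"\bavailable in\b"],
    "problem_solving": [r"\bhow can i solve\b", r"\bbest way to deal with\b"],
}


def classify_question(question: str) -> list[str]:
    """Return every category whose patterns match the question (case-insensitive)."""
    text = question.lower()
    return [
        category
        for category, regexes in PATTERNS.items()
        if any(re.search(rx, text) for rx in regexes)
    ]


def tally(questions: list[str]) -> Counter:
    """Count categories across many prompts; unmatched prompts are 'uncategorized'."""
    counts = Counter()
    for q in questions:
        counts.update(classify_question(q) or ["uncategorized"])
    return counts
```

A single question can fall into several categories at once, which is why `classify_question` returns a list: “What are the best affordable hotels near me?” is tagged as recommendation, price and location.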

ChatGPT Ranking Mechanisms

Now that we’ve explored the importance of understanding the type and structure of the questions users bring to GPT, let’s look at the other end of the process: the factors that determine rankings for solution-based queries. This underlying mechanism involves a comprehensive, non-linear process that includes:

Semantic Analysis

Semantic analysis connects words and phrases into larger semantic relationships, capturing how words occur together in different contexts.

To do this, GPT analyzes large amounts of text during training to map patterns and associations that are not immediately apparent but are essential for grasping the full meaning of a query. The process includes:

Query Analysis

GPT conducts an in-depth semantic analysis that involves breaking the query down into its elements (words, phrases and their syntactic relationships), which are then assessed in their collective context, i.e., how they relate to each other.

Determining User Intent

GPT determines user intent probabilistically, drawing on the word patterns and frequencies it learned from its training data and on how those words correlate in specific contexts.

For example, in a query about “budget-friendly family cars,” GPT recognizes the correlation between “budget-friendly” and cost considerations for vehicles, just as “family” cars are associated with attributes like space and safety.
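The kind of word association described above can be illustrated with pointwise mutual information (PMI), a classic statistic that measures how much more often two terms appear together than chance would predict. The Python sketch below computes PMI over a tiny, made-up corpus. It is only an analogy: GPT does not consult co-occurrence tables at query time but encodes such associations in its learned weights.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Optional

# A tiny, made-up corpus standing in for training text. Each document is
# reduced to its set of terms; this is an analogy, not how GPT works internally.
CORPUS = [
    {"budget-friendly", "car", "price", "fuel"},
    {"budget-friendly", "price", "discount"},
    {"family", "car", "space", "safety"},
    {"family", "car", "safety", "seats"},
    {"sports", "car", "speed"},
]


def pmi_table(docs: list[set[str]]) -> dict[tuple[str, str], float]:
    """PMI(a, b) = log2(P(a, b) / (P(a) * P(b))) for every co-occurring pair."""
    n = len(docs)
    term_counts = Counter(term for doc in docs for term in doc)
    pair_counts = Counter(
        pair for doc in docs for pair in combinations(sorted(doc), 2)
    )
    table = {}
    for (a, b), joint in pair_counts.items():
        p_ab = joint / n
        p_a, p_b = term_counts[a] / n, term_counts[b] / n
        table[(a, b)] = math.log2(p_ab / (p_a * p_b))
    return table


def association(table: dict[tuple[str, str], float], a: str, b: str) -> Optional[float]:
    """Order-independent lookup; None means the two terms never co-occur."""
    return table.get(tuple(sorted((a, b))))


table = pmi_table(CORPUS)
print(round(association(table, "price", "budget-friendly"), 2))  # 1.32
print(round(association(table, "car", "budget-friendly"), 2))    # -0.68
print(association(table, "safety", "budget-friendly"))           # None
```

On this toy corpus, “budget-friendly” is strongly associated with “price” but not with “car” in general, and “family” pairs positively with “safety”, mirroring the example in the text.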

Evaluation in Context

LLMs take into account that queries containing similar words can have entirely different meanings and requirements. They identify whether the phrasing of a question indicates a user seeking advice, making comparisons or inquiring about specific features, and tailor the answer to the underlying needs, whether budget constraints, performance features or brand preferences.

Data Retrieval and Synthesis

Alongside its findings from semantic analysis, ChatGPT draws on its extensive training data and, where available, on real-time web search.

Training Data Set

GPT’s training data spans a wide array of sources, from scholarly articles to popular media, giving it broad coverage across many domains. However, it is not known precisely what the training set contains, nor by what criteria sources are included in it.

Web Search

A crucial aspect of GPT’s training data is its cutoff date: at the time of writing, its knowledge is limited to April 2023. To compensate, the paid version of ChatGPT (ChatGPT Plus) now also offers web search through Bing. This integration is particularly important in fields where new products or services are frequently introduced.

Ranking Factors

When GPT ranks products or services in response to a query, it appears to rely on a set of ranking factors that help make its responses not only relevant but also credible, diverse and timely. Here’s a closer look at some of the most important ones:

Query & Contextual Matching

GPT prioritizes solutions that directly address the user’s needs. This relevance isn’t determined by keyword frequency alone, but by the depth of the match between the query’s intent and the information associated with the products or services.

Credibility and Popularity

Where products or services are mentioned, GPT assesses the reliability of sources. This involves evaluating the frequency and context of mentions across a spectrum of sources, giving higher weight to those frequently cited in reputable contexts. The model also considers the popularity of products, as indicated by their prevalence in the training data.

User Feedback Analysis

GPT’s answers reflect the sentiment of the feedback and reviews found in its training data and in recent web search results, and products or services with predominantly positive sentiment tend to be favored in its rankings.
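To illustrate what “predominantly positive sentiment” means in practice, here is a deliberately simple lexicon-based sentiment score in Python. The word lists and the one-word negation rule are assumptions made for this sketch; production systems use far larger lexicons or trained classifiers, and an LLM does not run a separate step like this.

```python
import re

# Tiny hypothetical lexicons, for illustration only.
POSITIVE = {"great", "reliable", "fast", "helpful", "cheap", "accurate"}
NEGATIVE = {"slow", "rude", "hidden", "expensive", "unreliable", "broken"}
NEGATORS = {"not", "never", "no"}


def review_sentiment(text: str) -> float:
    """Score one review in [-1, 1] as (positive hits - negative hits) / total hits."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = neg = 0
    for i, word in enumerate(words):
        polarity = 1 if word in POSITIVE else -1 if word in NEGATIVE else 0
        if polarity and i > 0 and words[i - 1] in NEGATORS:
            polarity = -polarity  # "not reliable" counts as negative
        if polarity > 0:
            pos += 1
        elif polarity < 0:
            neg += 1
    hits = pos + neg
    return 0.0 if hits == 0 else (pos - neg) / hits


def product_sentiment(reviews: list[str]) -> float:
    """Average review sentiment for a product; 0.0 when there are no reviews."""
    if not reviews:
        return 0.0
    return sum(review_sentiment(r) for r in reviews) / len(reviews)
```

Averaging per review, rather than pooling all words, keeps one long review from dominating the product’s overall score.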

Diversity and Coverage

GPT balances diversity with relevance, so that users are given a broad set of choices that are still highly relevant to the query.

Fresh Information

Historical data forms the backbone of GPT’s knowledge, since some queries benefit from time-tested information or a long-standing reputation, but GPT also considers new information, particularly in markets where developments happen rapidly.
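To make the interplay of these factors concrete, the sketch below combines them into a single weighted score and then enforces diversity by capping how many results may come from the same category. Everything here is hypothetical: the `Candidate` fields, the weights, the one-year freshness half-life and the category cap are illustrative assumptions, not ChatGPT’s actual ranking logic.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical weights; the real factors and their weights are not public.
WEIGHTS = {"relevance": 0.5, "credibility": 0.25, "sentiment": 0.15, "freshness": 0.10}


@dataclass
class Candidate:
    name: str
    category: str          # e.g. "aggregator", "airline"
    relevance: float       # 0..1, match between query intent and product information
    credibility: float     # 0..1, weighted mentions in reputable sources
    sentiment: float       # -1..1, average review sentiment
    last_updated: date     # date of the most recent information about the product


def freshness(last_updated: date, today: date, half_life_days: float = 365.0) -> float:
    """Exponential decay: 1.0 for today's information, 0.5 after one half-life."""
    age_days = max((today - last_updated).days, 0)
    return 0.5 ** (age_days / half_life_days)


def score(c: Candidate, today: date) -> float:
    """Weighted sum of the factors, each scaled to the 0..1 range."""
    return (
        WEIGHTS["relevance"] * c.relevance
        + WEIGHTS["credibility"] * c.credibility
        + WEIGHTS["sentiment"] * (c.sentiment + 1) / 2
        + WEIGHTS["freshness"] * freshness(c.last_updated, today)
    )


def rank(candidates: list[Candidate], today: date,
         max_per_category: int = 1, top_k: int = 3) -> list[str]:
    """Sort by score, then keep at most `max_per_category` results per category."""
    ordered = sorted(candidates, key=lambda c: score(c, today), reverse=True)
    picked: list[Candidate] = []
    per_category: dict[str, int] = {}
    for c in ordered:
        if per_category.get(c.category, 0) < max_per_category:
            picked.append(c)
            per_category[c.category] = per_category.get(c.category, 0) + 1
            if len(picked) == top_k:
                break
    return [c.name for c in picked]
```

With one result allowed per category, a lower-scoring option from a different category can displace a second strong result from the same category, which is the trade-off between relevance and diversity described above.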

Alongside these, GPT considers other factors, although to a smaller degree, such as:

Personalization and Feedback

GPT’s responses are not static: within a conversation, each user interaction is an opportunity for the model to adjust. When users provide more specific requirements or feedback, GPT alters its responses accordingly, and this iterative process helps make the final recommendations as relevant and personalized as possible.

Ethical and Unbiased Ranking

GPT aims to maintain an objective stance in its responses. It is designed to avoid biases that could arise from paid promotions, advertising or other undue external influence, so that recommendations are based on merit and relevance.

Final Words

There is no doubt that the introduction of GPT and its subsequent iterations is redefining the parameters of search engine optimization. Unlike traditional ranking models built primarily on backlinks and keyword density, GPT opens a new frontier where anticipating and understanding the user’s context and intent, and proactively optimizing content for complex queries, will be at the forefront.

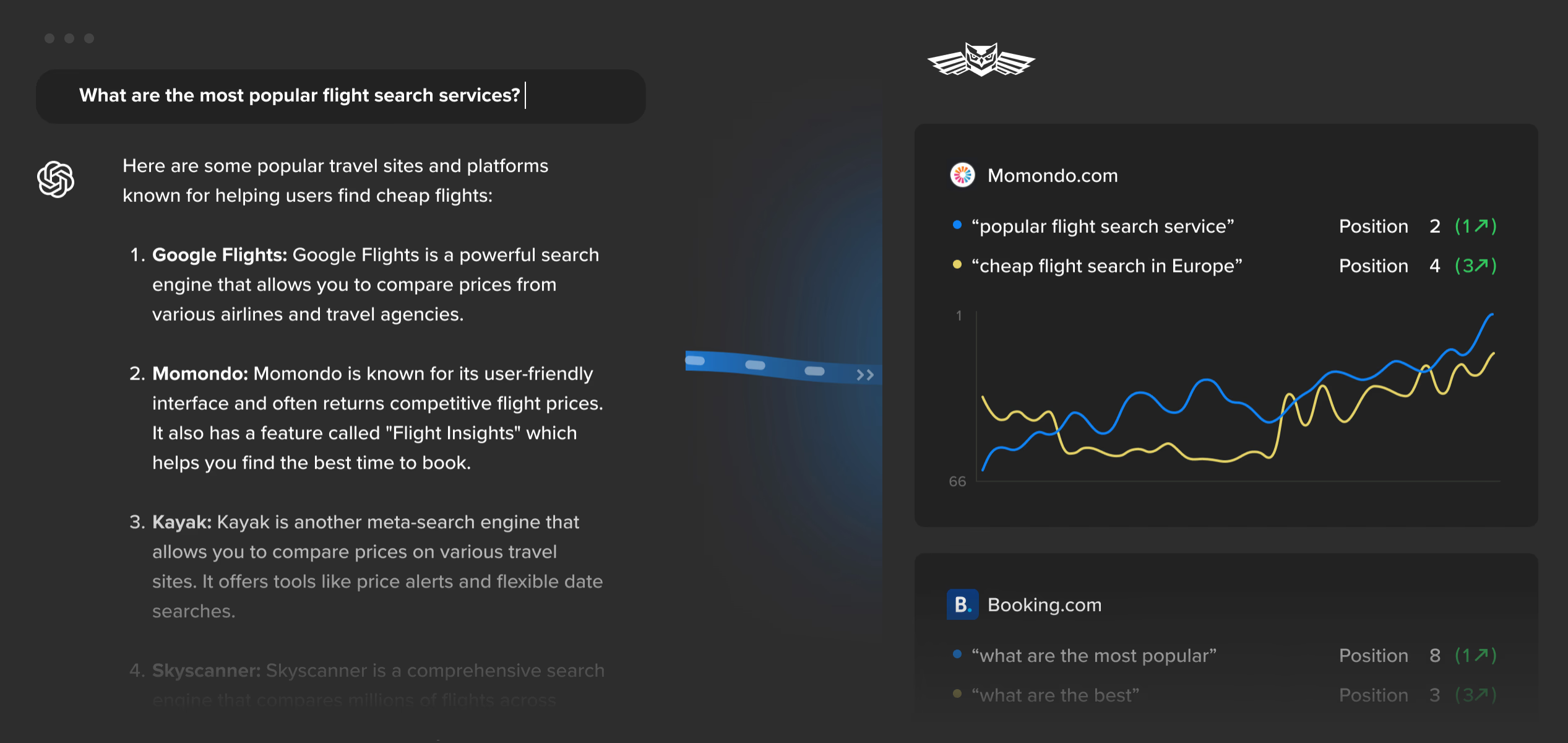

To do this effectively, it is important not only to understand user input and GPT ranking mechanisms, but also to know where products and services rank across different LLMs. Those looking ahead should consider using tools built specifically to track GPT rankings, to gain insight into ranking positions for different user questions.
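A basic version of such tracking can be scripted. The Python sketch below assumes you have already collected answers to the same question from an LLM (how you obtain them, manually or through an API, is outside its scope) and reports each brand’s position by order of first mention. Because LLM answers vary from run to run, the `visibility` helper aggregates over several answers. The function names and the first-mention rule are illustrative assumptions, not how any particular tracking tool works.

```python
import re
from typing import Optional


def mention_positions(answer: str, brands: list[str]) -> dict[str, Optional[int]]:
    """Position of each brand by first mention in an answer (1 = earliest).

    Matching is case-insensitive on whole words; brands that never appear get None.
    """
    first_offsets: dict[str, int] = {}
    for brand in brands:
        match = re.search(rf"\b{re.escape(brand)}\b", answer, flags=re.IGNORECASE)
        if match:
            first_offsets[brand] = match.start()
    positions: dict[str, Optional[int]] = {brand: None for brand in brands}
    for position, brand in enumerate(sorted(first_offsets, key=first_offsets.get), start=1):
        positions[brand] = position
    return positions


def visibility(answers: list[str], brand: str, brands: list[str]) -> tuple[float, Optional[float]]:
    """Share of answers mentioning `brand`, and its average position when it appears."""
    found = [
        pos for pos in (mention_positions(a, brands)[brand] for a in answers)
        if pos is not None
    ]
    share = len(found) / len(answers) if answers else 0.0
    average = sum(found) / len(found) if found else None
    return share, average
```

For an answer that lists Google Flights, Skyscanner and Kayak in that order, `mention_positions` returns positions 1, 2 and 3 for those brands and `None` for a tracked brand that is never mentioned.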

As we embrace the innovative capabilities of LLMs and prepare for the advancements their progress is ushering into the SEO world, it is important to remember that the AI era is still in its infancy and subject to rapid changes.

We hope this guide has helped shed light on some of the most important aspects of GPT ranking mechanisms, which will be fundamental to using this emerging technology effectively. As always, stay informed on the latest developments and stay tuned for more innovations.